Lambda: activations) Queues and the conditional operator # Bad - droupout outside the conditional evaluated every time!ĭo_activations = tf.nn.dropout(activations, 0.7)

#Online graph builder tensorflow code

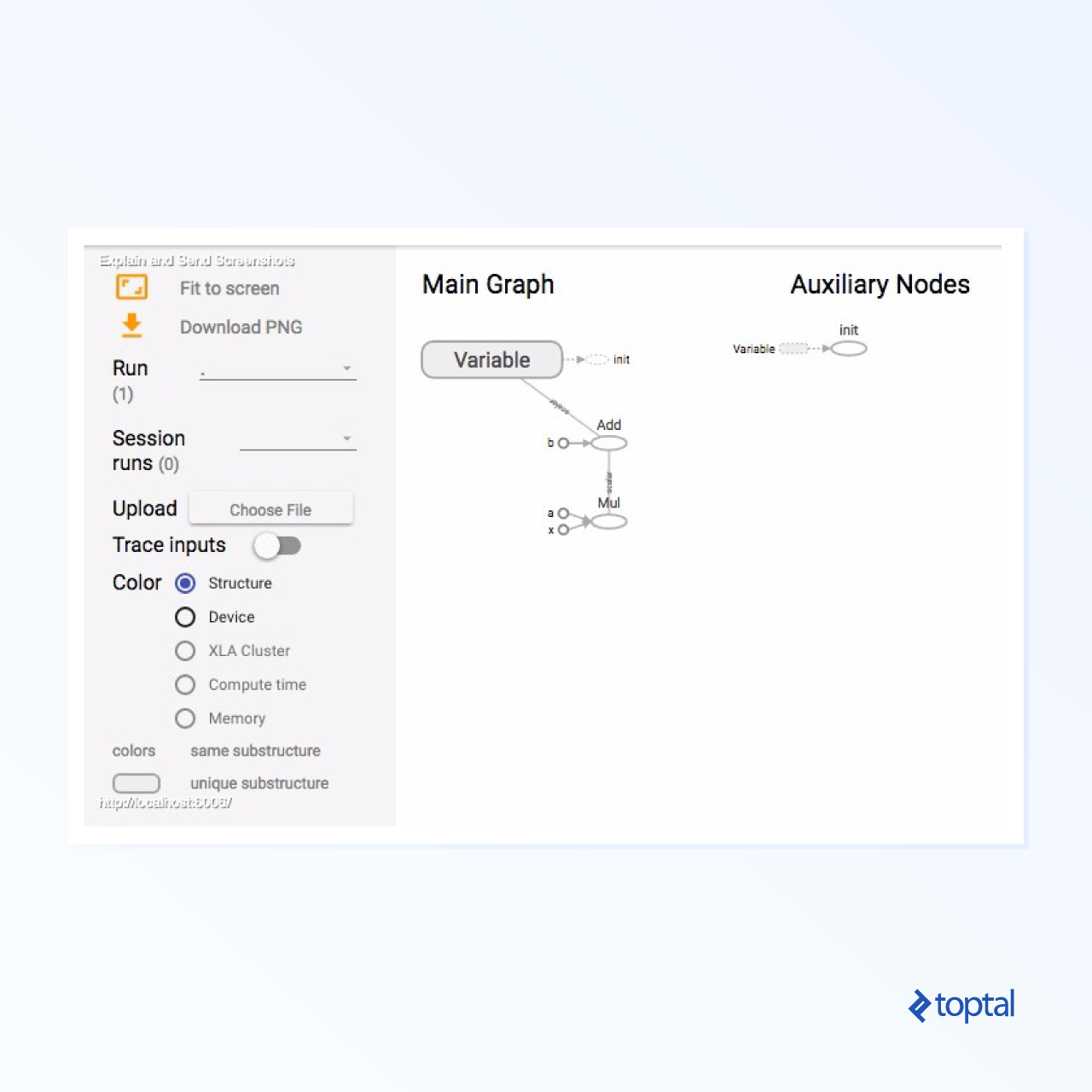

So this code works correctly: # Good - dropout inside the conditionalīut this will run the dropout even if `is_train = False`. The `tf.cond` operation is like a box of laziness, but it protects only what’s inside. This is also true if we mutate something as result of a condition such as the case with batch normalization. If we put it behind a conditional operator, we would expect it to only run at evaluation time. Let’s say we have an expensive operation we would only like to run during evaluation. There are two sources of complexity that make the picture less rosy though – laziness and queues. Now our serialized models work for training and evaluation. The big advantage is that now we have all of the logic in one graph, for instance, we can see it in TensorBoard. is_train = tf.placeholder(tf.bool)ĭropout = tf.nn.dropout(activations, 0.7) TensorFlow does have a way to encode different behaviors into a single graph – the ` tf.cond` operation. And maybe serving… But definitely training and evaluation. So we still want one graph, but we want to use it for both training and evaluation. In addition, training and evaluation don’t use the same graph (even if they share weights) and require awkward coordination to mesh together. This is not always practical with larger repositories and in any case requires some operations effort. Even a small change (like changing a variable name) will break the model in production so to revert to an older model version, we also need to revert to the older code. This approach has a big drawback however – the serialized graph can no longer be used without the code that produced it. TensorFlow even ships with tools like ` tf.variable_scope` that make creating different graphs easier. A dropout example might look like this: if is_training:Īctivations = tf.nn.dropout(activations, 0.7) This is the method that we usually find in the documentation. Creating multiple graphs with the same code So what can we do? 
We can try to create more than one graph.

What’s done cannot be undone, so to speak. TensorFlow will just add a suffix to the operation name: Even if we try to overwrite with tf.Session() as sess: TensorFlow operations implicitly create graph nodes, and there are no operations to remove nodes. TensorFlow graphs in Python are append-only.

#Online graph builder tensorflow update

Can’t we just build a graph and update it as we go? The are a couple of ways to do this, and picking the right one is not straightforward. Turns out we need 3-5 different graphs in order to represent our one model. While the conceptual model is the same, these use cases might need different computational graphs.įor example, if we use TensorFlow Serving, we would not be able to load models with Python function operations.Īnother example is the evaluation metrics and debug operations like ` tf.Assert` – we might not want to run them when serving for performance reasons. For example we could re-train an existing model or apply the model to a large amount of data in batch mode. Serving – on-demand prediction for new data.Evaluation – calculating various metrics during training on a different data set to evaluate training quality or for cross validation.Often done periodically as new data arrives. Training – finding the correct weights or parameters for the model given some training data.Typically a model will be used in at least three ways: One reason is that the “Computation Graph” abstraction used by TensorFlow is a close, but not exact match for the ML model we expect to train and use. Training small models is easy, and we mostly do this at first, but as soon as we get to the rest of the pipeline, complexity rapidly mounts. Large production pipelines in TensorFlow are quite difficult to pull off.
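
The append-only naming behavior described earlier can be mimicked in a few lines of plain Python. This is a toy sketch, not TensorFlow’s implementation: the `AppendOnlyGraph` class and its `add_op` method are hypothetical, and the `name`, `name_1`, `name_2` suffix scheme is an assumption modeled on how TensorFlow 1.x makes op names unique.

```python
class AppendOnlyGraph:
    """Toy model of an append-only graph: ops can be added, never removed."""

    def __init__(self) -> None:
        self._ops: list[str] = []        # op names in insertion order
        self._used: dict[str, int] = {}  # name -> times it has been requested

    def add_op(self, name: str) -> str:
        """Register an op and return the (possibly suffixed) name it received."""
        i = self._used.get(name, 0)
        self._used[name] = i + 1
        unique = name
        if i > 0:
            # Name already taken: try name_1, name_2, ... until one is free.
            while f"{name}_{i}" in self._used:
                i += 1
            unique = f"{name}_{i}"
            self._used[unique] = 1
        self._ops.append(unique)
        return unique

    @property
    def op_names(self) -> tuple[str, ...]:
        # A read-only snapshot; the class deliberately has no remove method.
        return tuple(self._ops)


g = AppendOnlyGraph()
print(g.add_op("dropout"))  # dropout
print(g.add_op("dropout"))  # dropout_1: "overwriting" only adds a new op
print(g.op_names)           # ('dropout', 'dropout_1')
```

Because the class offers no way to delete or replace an entry, the only way to “change” such a graph is to build a new one, which is what pushes us toward multiple graphs.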
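
The “multiple graphs with the same code” approach, and the earlier point that one model tends to become several graphs, can be illustrated without TensorFlow. In this sketch `Mode`, `build_graph` and all op names are hypothetical placeholders, not real TensorFlow ops. Build-time `if` statements stand in for `if is_training:` and yield a structurally different graph for each use case.

```python
from enum import Enum
from typing import List


class Mode(Enum):
    TRAIN = "train"
    EVAL = "eval"
    SERVE = "serve"


def build_graph(mode: Mode) -> List[str]:
    """Return the op names a hypothetical model graph would contain in `mode`.

    The `if` statements run once, while the graph is being built, so each
    mode yields a different graph from the same code.
    """
    ops = ["inputs", "dense", "activations"]
    if mode is Mode.TRAIN:
        ops.append("dropout")                 # regularization only in training
    ops.append("predictions")
    if mode is Mode.TRAIN:
        ops += ["loss", "optimizer"]
    elif mode is Mode.EVAL:
        ops += ["loss", "metrics", "assert"]  # skipped when serving
    return ops


graphs = {mode: build_graph(mode) for mode in Mode}
for mode, ops in graphs.items():
    print(mode.value, ops)
```

Since the graph’s structure lives in `build_graph`, reloading an older trained model requires the matching older version of that function, which is the drawback discussed in the article.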
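
The laziness pitfall of the conditional operator can be reproduced in a pure-Python toy of a deferred-execution graph. `LazyGraph`, `CondNode` and `fake_dropout` are hypothetical names, not TensorFlow APIs. The toy `cond` builds both branches when it is called, as `tf.cond` does, but at run time it evaluates only the ops created inside the chosen branch. Anything a branch merely captures from outside is computed unconditionally, which models the behavior the article describes. The `executed` log shows that the Bad variant runs its dropout even when `is_train` is fed `False`, while the Good variant does not.

```python
from typing import Callable, Dict, List, Optional


class Node:
    """One op in a toy deferred-execution graph."""

    def __init__(self, name: str, fn: Optional[Callable], inputs: List["Node"]) -> None:
        self.name, self.fn, self.inputs = name, fn, inputs


class CondNode(Node):
    """Toy conditional: both branches are built up front, one is run."""

    def __init__(self, name: str, pred: Node, outputs: tuple, captures: List[Node]) -> None:
        super().__init__(name, None, [pred])
        self.pred = pred
        self.outputs = outputs    # (true_branch_output, false_branch_output)
        self.captures = captures  # outside ops the branches read from


class LazyGraph:
    def __init__(self) -> None:
        self.nodes: List[Node] = []
        self.executed: List[str] = []  # names of ops that actually ran

    def op(self, name: str, fn: Callable, *inputs: Node) -> Node:
        node = Node(name, fn, list(inputs))
        self.nodes.append(node)
        return node

    def placeholder(self, name: str) -> Node:
        def unfed() -> None:
            raise ValueError(f"placeholder {name!r} must be fed")
        return self.op(name, unfed)

    def cond(self, pred: Node, true_fn: Callable, false_fn: Callable, name: str = "cond") -> Node:
        outputs, captures = [], []
        for branch_fn in (true_fn, false_fn):
            start = len(self.nodes)
            out = branch_fn()  # build the branch now, at graph-construction time
            inside = {id(n) for n in self.nodes[start:]}
            reads = [out] + [i for n in self.nodes[start:] for i in n.inputs]
            captures += [r for r in reads if id(r) not in inside]
            outputs.append(out)
        node = CondNode(name, pred, tuple(outputs), captures)
        self.nodes.append(node)
        return node

    def run(self, node: Node, feed: Optional[Dict[Node, object]] = None):
        cache = {id(k): v for k, v in (feed or {}).items()}
        return self._eval(node, cache)

    def _eval(self, node: Node, cache: dict):
        if id(node) in cache:
            return cache[id(node)]
        if isinstance(node, CondNode):
            for c in node.captures:  # outside ops run no matter which branch wins
                self._eval(c, cache)
            taken = node.outputs[0] if self._eval(node.pred, cache) else node.outputs[1]
            value = self._eval(taken, cache)  # only the chosen branch's inner ops run
        else:
            value = node.fn(*[self._eval(i, cache) for i in node.inputs])
        self.executed.append(node.name)
        cache[id(node)] = value
        return value


def fake_dropout(xs):  # hypothetical stand-in for dropout: doubles values so we can see it ran
    return [x * 2 for x in xs]


g = LazyGraph()
is_train = g.placeholder("is_train")
activations = g.op("activations", lambda: [1.0, 2.0, 3.0])

# Bad - dropout built outside the conditional
do_activations = g.op("dropout_bad", fake_dropout, activations)
bad = g.cond(is_train, lambda: do_activations, lambda: activations, name="cond_bad")

# Good - dropout built inside the true branch
good = g.cond(is_train,
              lambda: g.op("dropout_good", fake_dropout, activations),
              lambda: activations, name="cond_good")

for label, out in (("bad", bad), ("good", good)):
    g.executed.clear()
    g.run(out, feed={is_train: False})
    print(label, g.executed)
# bad ['activations', 'dropout_bad', 'cond_bad']
# good ['activations', 'cond_good']
```

Feeding `True` instead makes the Good variant run `dropout_good` as well. The toy only models which ops get computed; it makes no claim about how TensorFlow schedules ops internally.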
